About COG-MHEAR

This Programme Grant is funded by the EPSRC and will be undertaken by a multidisciplinary team of experts with complementary skills in cognitive data science, speech technologies, wireless communications, engineering, sensing, and clinical hearing science from Edinburgh Napier University, the University of Edinburgh, the University of Glasgow, the University of Wolverhampton, Heriot-Watt University, the University of Manchester, and the University of Nottingham. The world-class research team will be complemented by clinical partners and end-users, ranging from a leading global hearing aid manufacturer (Sonova), a wireless research and standardisation driver (Nokia Bell Labs), and a chip design SME (Alpha Data) to national Innovation Centres (Digital Health & Care Institute (DHI), The Data Lab) and charities (Deaf Scotland (DS) and Action on Hearing Loss).

Background

Currently, only 40% of people who could benefit from hearing aids (HAs) have them, and most people who have HAs don't use them often enough. There is a social stigma around wearing visible HAs ('fear of looking old'); HAs require a lot of conscious effort to concentrate on different sounds and speakers; and only limited use is made of speech enhancement, that is, making the spoken words (often the most important aspect of hearing for people) easier to distinguish. It is not enough just to make everything louder.

Objectives

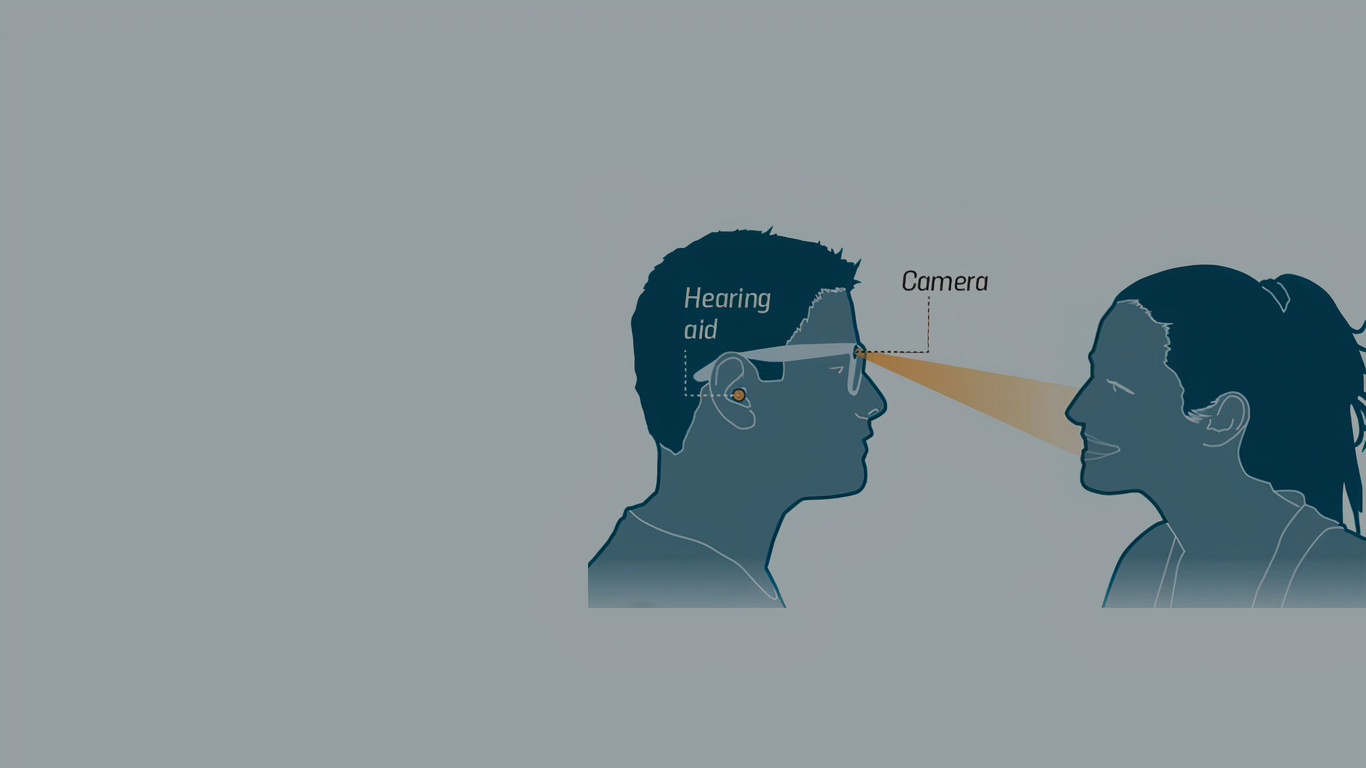

To transform hearing care by 2050, we aim to completely rethink the way HAs are designed. Our transformative approach will, for the first time, draw on the cognitive principles of normal hearing. Listeners naturally combine information from both their ears and their eyes: we use our eyes to help us hear. We will create "multi-modal" aids that not only amplify sounds but also use information collected from a range of sensors to improve understanding of speech. For example, a large amount of information about the words a person says is conveyed visually, through the movements of the speaker's lips, hand gestures, and similar cues. Current HAs ignore this information, but it could be fed into the speech enhancement process. We can also use wearable sensors (embedded within the HA itself) to estimate listening effort and its impact on the person, and use this estimate to tell whether the speech enhancement process is actually helping.

Challenges

Creating these multi-modal "audio-visual" HAs raises major technical challenges that need to be tackled holistically. Making use of lip movements traditionally requires a video camera filming the speaker, which raises privacy concerns. We can address some of these concerns by encrypting the data as soon as they are collected, and we will pioneer new approaches for processing and understanding the video data while they remain encrypted. We aim never to access the raw video data, yet still use it as a useful source of information. To complement this, we will also investigate methods for remote lip reading without a video feed, exploring instead the use of radio signals for remote monitoring.

Adding these new sensors, and the processing required to make sense of the data they produce, will place a significant additional power and miniaturisation burden on the HA device. We will need our sophisticated visual and sound processing algorithms to operate with minimum power and minimum delay, and we will achieve this through dedicated hardware implementations that accelerate the key processing steps. In the long term, we aim for all processing to be done in the HA itself, keeping data local to the person for privacy. In the shorter term, some processing will need to be done in the cloud (because it is too power-intensive to run on the device), and we will create new very low latency (<10 ms) interfaces to cloud infrastructure to avoid delays between when a word is "seen" being spoken and when it is heard. We also plan to use advances in flexible electronics (e-skin) and antenna design to make the overall unit as small, discreet, and usable as possible.

We are partnering with